Confused search engines and spliited link equity along with surfaced incorrect URLs can all be a consequence of duplicate content. Learn faster and more efficient ways of finding duplicate content with better signal consolidating using rel=canonical, 301 redirects, and improved URL hygiene.

When you dive into search engine optimization—or SEO for short—you’ll quickly notice how duplicate content stands out. It sounds techy, but it can quietly undo the hard work you’ve put into your website. As a result, marketers keep scratching their heads as rank sits still and traffic dips. This guide pulls back the curtain. We’ll explain what duplicate content is, why it happens, and how it affects your search rank. By the end, you’ll be ready to spot duplicates and remove them for good.

What Is Duplicate Content?

Definition and Scope

Put simply, duplicate content appears when large chunks of text show up on two or more web pages that look almost or exactly alike. Because the same block of writing lives at different web addresses, search engines get confused about which is the “real” page. This can happen within one website (internal duplication) or between different websites (external duplication).

Picture a giant library where one book sits under three catalog codes. A visitor asks for the title, and the librarian freezes, unsure which entry to trust. That’s the headache Google faces. Every page’s URL acts like a barcode. When the same page is published on three URLs, the engine must pick one. Otherwise, it risks recommending three copies. Consequently, other unintentional SEO issues pile up.

Google’s Response to Duplicate Content

The biggest rumor circling webmasters is a “duplicate content penalty.” Many fear that if robots notice several similar pages, the whole site will crash in rankings or vanish from the index.

Let’s set the record straight: the “duplicate content penalty” is mostly a myth. Google says that unless the same text is used to trick the system, it won’t punish your site. Practices that trip this wire include scraping someone else’s work, cloning it on your site, or spinning up near-identical pages just to grab clicks. In practice, most duplicates are accidents—think auto-generated product pages or printer-friendly versions—so a formal “penalty” isn’t your biggest worry.

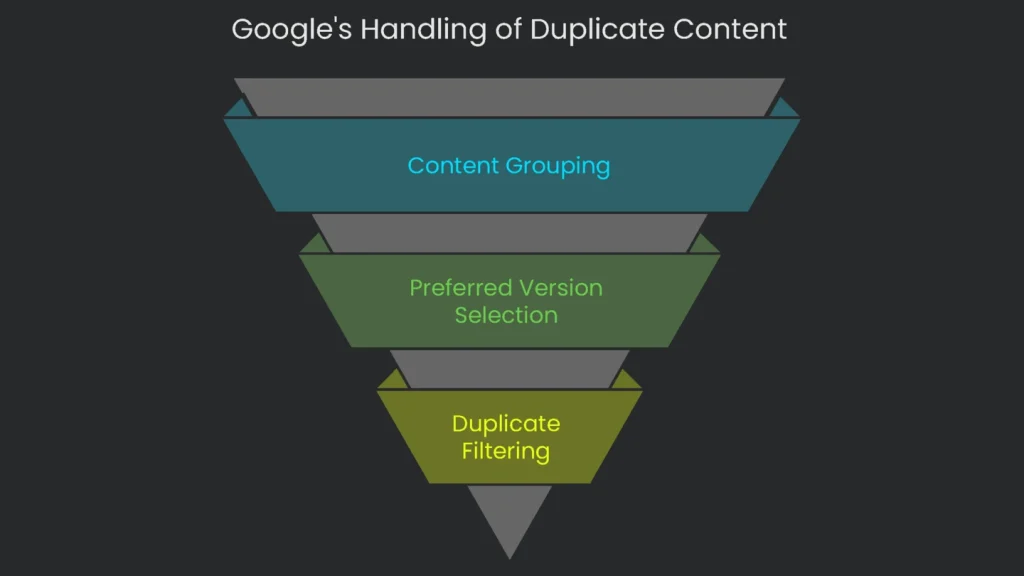

The real issue is how Google sifts content. When bots discover multiple URLs with the same text, they don’t punish them. Instead, they group them, identify a “preferred” version—usually the one with stronger signals like backlinks or engagement—and rank that one. Duplicates get filtered out automatically. Consequently, you notice traffic drops, diluted authority, and the wrong page appearing in results. So, while no label says “penalty,” the impact feels similar. Therefore, tidy your site structure or implement correct canonical tags. Clean up confusion, and your most important pages can finally earn the visibility they deserve.

Why Duplicate Content Happens

Most duplicate content appears by mistake. It usually stems from how websites and their tools (Content Management Systems, or CMS) work. Developers and search engines view websites differently. A developer may pull a page by an internal ID, while a search engine indexes a page by its full URL. As a result, tiny URL differences can create the same page twice.

URL Variations Causing Duplication

The most common cause is a single article being reachable by more than one URL. A search engine treats every unique URL as a different page, even when differences look tiny to us.

- HTTP vs. HTTPS: Many sites adopt HTTPS for security. However, if older HTTP pages don’t redirect, every page appears twice: once as

http://www.example.comand again ashttps://www.example.com. For search engines, that is the same content at two addresses. - WWW vs. non-WWW: A site can work with or without “www.” When both

https://www.example.comandhttps://example.comload without redirecting, Google sees two identical copies. This spreads page rank across both versions. Therefore, pick one and redirect the other consistently. - Trailing Slashes: A trailing slash can split URLs. For example,

https://example.com/page/andhttps://example.com/pagelook similar, yet search engines treat them as different. If both are live, they compete for the same queries. - URL Capitalization: Google notices case. That means

https://example.com/Our-Servicesandhttps://example.com/our-servicescan become two pages if both exist. Consequently, standardize link casing across your site. - Session IDs and Tracking Parameters: Parameters like

https://example.com/page?sessionid=789help with tracking. However, every unique string can create overlapping copies of the same content. Try to keep parameters out of the index.

Whenever you shop online—like when you keep items in a cart—the site may start a “session.” Some platforms attach a Session ID to the URL, such as https://example.com/product?sessionid=12345. Because each visit generates a new ID, one page can produce a sea of nearly identical URLs.

Now let’s talk promo links. When you launch a campaign, you might add UTM tags, turning https://example.com/landing-page into https://example.com/landing-page?utm_source=newsletter. To humans, they look the same. However, search engines treat each as a new page. As a result, visits and shares scatter across versions, which weakens the page’s SEO signals.

Platform and Structural Issues

Website management tools can open the door for ghost pages when configuration is off.

- Print-Friendly and PDF Copies: Many systems generate “clean” print pages. If search engines index them, you end up with a perfect copy. PDF versions can create the same risk.

- Comment Page Overflow: Some systems, like WordPress, split long discussions into

/article/comment-page-1/and/article/comment-page-2/. Each page gets a fresh URL, but the main article text repeats. Consequently, duplication risk rises.

E-commerce sites are naturally prone to duplicates because their layouts are intricate. Tools that help shoppers can, unintentionally, trip up search engines.

- Faceted Navigation: Filtering by color, size, or brand often appends parameters like

?color=blue&size=large. A few clicks can generate countless URLs with minor differences. Consequently, crawlers waste time on low-value pages. - Product Variations: Imagine a shirt with ten colors and five sizes. If each combo gets its own link, you now have fifty near-identical pages competing for the same queries.

- External Causes: Other sites may scrape or syndicate product info. When the same specs appear in multiple places, search engines face more confusion. Therefore, ensure each product resolves to one clear URL.

- Content Syndication: Syndicating posts can expand reach. However, if a partner’s copy gets indexed first, it may outrank your original. As a result, you risk losing traffic you already earned.

- Content Scraping: Scraping is sneakier. Bots copy your article and post it on a low-quality site. Most engines usually identify the original later. However, short-term damage still happens as users hit the clone. Your credibility can suffer.

How Duplicates Harm SEO

Why It Matters

When the same article appears more than once in search, the ripple effects hurt performance. Links split strength, metrics skew, and momentum stalls. Several of the more serious results connect and amplify one another.

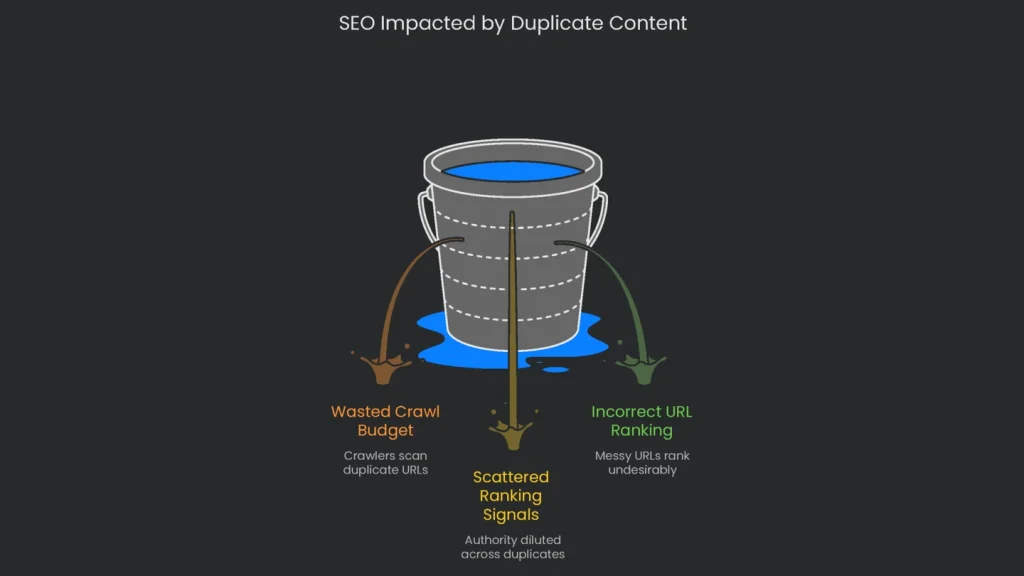

Wasted Crawl Budget

Search engines can only crawl a limited number of pages on your site. This allowance is the crawl budget. When thousands of duplicate URLs exist, crawlers spend time on copies instead of new posts or key product pages. On large sites, crucial pages may get crawled less often—or missed.

The crawl waste isn’t just a budget problem; it muddies ranking signals. Authority—especially from backlinks—should land on one optimized page. Duplicates scatter that authority across many URLs. One link hits the non-secure version, another the trailing-slash version, and a third the mixed-case URL. Consequently, signals conflict, and the best page may not rank.

Google has noted that if it can’t spot all duplicates, it can’t merge their signals. As a result, rank signals remain scattered and weaker across the board. Therefore, consolidation is essential.

Incorrect URL Ranking

Often, the worst URL ends up in results. The algorithm gathers signals and settles on a version it thinks matters most—even if a site owner would never choose it. For example, a shopper might see https://example.com/shoes/running?sessionid=xyz&sort=price instead of the clean https://example.com/shoes/running. People hesitate to click messy URLs. That visible mess reflects deeper structural issues.

How to Fix Duplicate Content

Choose the Right Fix

Finding duplicates is step one. Next, choose the right fix to tidy your site and boost ranking. Various tools can help. However, match the fix to the exact problem.

The Canonical Tag

A rel="canonical" tag is the go-to tool against duplicates. It’s a tiny line of HTML in the <head>. You tell search engines, “This is the page to remember.” Think of it as a polite suggestion. Engines usually listen and merge link equity and ranking signals onto that URL.

Whenever a page duplicates another, add this code in the duplicate’s head to indicate the preferred page:

<link rel=”canonical” href=”https://example.com/preferred-master-page” />

Set it up correctly:

- Mention the Whole URL: Always include

https://. Shorthand paths (like/preferred-master-page) can confuse crawlers. - Point Back to Itself: Each page should self-canonicalize. Example:

https://example.com/page-ashould have<link rel="canonical" href="https://example.com/page-a" />. This guards against unseen URL variants. - Tell the Syndication Boss: If another site republishes your article, ask them to add a canonical pointing to your original. Consequently, ranking signals flow back to your page.

- Avoid Simple Slip-ups: Don’t make page two of a series point to page one. Each page needs its own self-referential canonical. Also, use only one canonical per page to avoid confusion.

301 Redirects

Think of a canonical as a polite request and a 301 redirect as a firm order. A 301 tells browsers and bots to use a new URL. It is the strongest fix for duplicate pages you do not want live at all. All variants point to one link, maximizing ranking signals.

Only redirect pages that never needed to be seen:

- Send anyone reaching the HTTP version to the HTTPS version.

- Redirect non-WWW to WWW—or the reverse—consistently.

- Unify URLs so a trailing slash never creates duplicates.

A 301 moves almost all link equity from the old address to the new page. Consequently, signals consolidate, and search engines drop the old URL from the index.

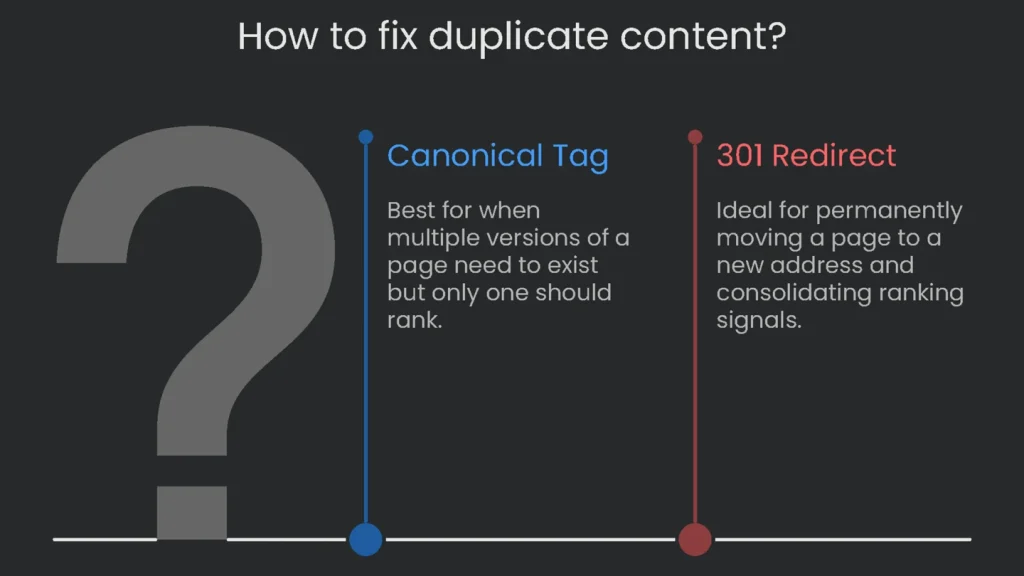

Canonical Tag vs. 301 Redirect

Choosing between a canonical and a 301 can be tricky. In short, use a 301 when you want to remove an extra page and funnel visitors to one address for good. Use rel="canonical" when several versions must remain for tracking or user choice, but you want only one to rank. See the table below for a quick comparison.

| Feature | rel="canonical" Tag | 301 Redirect |

| Nature | An HTML tag (a “hint” for bots) | A server code (a “signal” for browsers and robots) |

| Purpose | Points to the main page among live copies | Permanently moves a page to a new address |

| User Experience | Visitors stay on the duplicate page; the switch is silent | Visitors are sent to the main page instantly |

| Search Engine Behavior | Engines collect signals and choose a single “main” version to show. | Engines move authority to the target and remove the duplicate from the index. |

| Best Use Case | Best for messy URL parameters and when content is syndicated elsewhere. | Best for site-wide moves (HTTP→HTTPS), WWW vs. non-WWW, or domain changes. |

A guide comparing canonical tags to 301 redirects is worth a read if you want to master the subject.

A Note on Google Search Console Tools

In the past, site owners used settings in Google Search Console, like “Preferred Domain” and “URL Parameter,” to manage duplicates. Both options are now retired. Today, decisions rely on page content and other signals, and Google’s crawlers are better at spotting the true URL. Therefore, the onus is on site owners. You need solid 301 redirects and a prevention plan. If you handle these, the engine will focus on the page you prefer without extra back-end tinkering.

Conclusion

Key Takeaways

Duplicate content rarely triggers a formal penalty, but it is a common technical SEO issue. It sneaks in through normal site quirks, such as URL parameters or user features that ignore crawler behavior. When engines find the same content in many places, they pick one and sideline the rest. Consequently, crawl time gets wasted, signals split, and the wrong URL can surface.

Next Steps

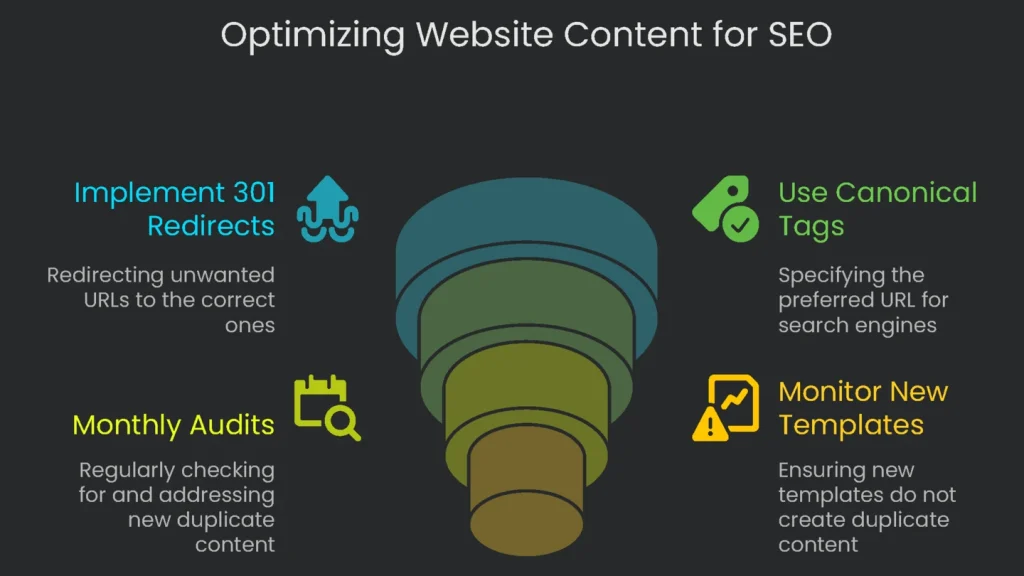

Cleaning this up is a must-have task on every tech SEO checklist. If a URL version does not belong, a 301 redirect removes it for good. If you must keep variants—such as for faceted navigation or languages—use a rel="canonical" link to point search engines to the “real” page.

Ongoing Maintenance

Think of your site like a library. If the same book sits everywhere, the librarian—our search engines—misses your best copy. This is not a one-time cleanup. Therefore, audit monthly, consolidate URLs, and monitor new templates. At Technicalseoservice, we track down duplicates, remove them, and guide crawlers so the true winners reach the top shelves where searchers—and traffic—can’t miss them.

Implementation steps

- Launch another crawl to cluster nearly-duplicate pages by title, hash, or layout.

- Pick a single canonical URL for each cluster and 301 redirect the duplicate URLs to this target.

- On all indexable pages add a self-canonical; for syndicated content, link to the version on the original domain.

- Normalize URLs (enforce HTTPS, www, trailing slashes, all lowercase) and tidy up your internal links.

- Monitor Search Console Coverage, recrawl the site, and check that the primary URL is ranked and visible only the preferred status

Frequently Asked Questions

What counts as duplicate content?

Identical or nearly identical stuff reachable at different URLs, on the same site or another.

Is there a duplicate content penalty?

Not really—there isn’t a hard penalty, but the search engine can split signals and rank the wrong page. Best to merge the content instead.

How do I fix duplicates?

If one version is the main one, set a 301 redirect. If all versions need to stay, use a rel=canonical tag on the others.

Do tracking parameters like UTM create trouble?

Yep. Apply a canonical tag and avoid putting tracking parameters in links you want search engines to index.

How do I spot duplicates?

Crawl the site, check coverage reports in Google Search Console, and look at server logs for runaway parameters.